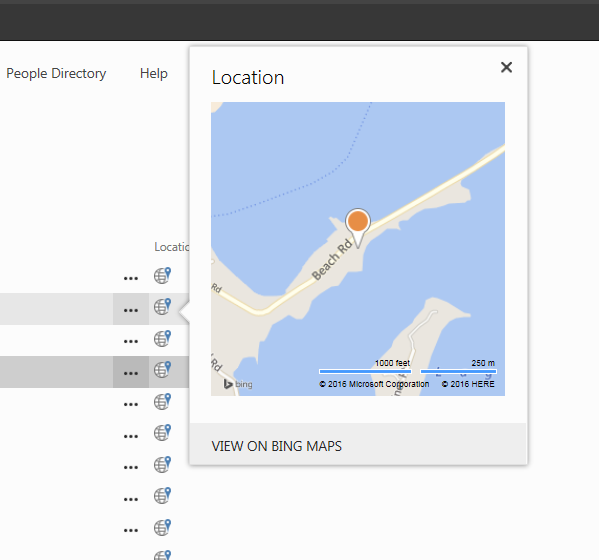

Microsoft introduces a new type of list field named “geography” (the underlying SharePoint field type is Geolocation), which lets you attach a latitude and longitude to your records. Lists that capture office information, delivery locations, or even employee directories for multi-state or global companies can all use this type of field. Once the coordinates are in, a simple Bing map view lets users see a geographical representation of their data.

However, this feature is currently hidden in both Office 365 and SharePoint 2013.

For on-premises installations, your admin needs to run an installer package available on the Microsoft site (SQLSysClrTypes.msi) on the SharePoint front-end web servers.

For Office 365, there are a couple of steps you need to execute to enjoy this exciting new feature:

- Register with Bing Maps to get a key for your map views

- Add a new field of type “geography” to the list

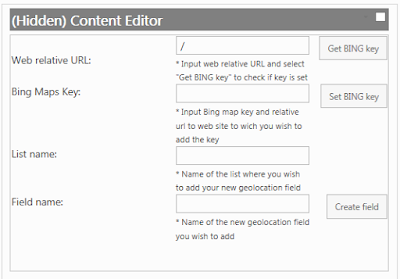

- Since this type is hidden, you can use PowerShell or JavaScript to create it (Reference this script)

The script creates a friendly input form that you can even leave hidden on the page for future use. Make sure you specify the Bing Maps key in the textbox.

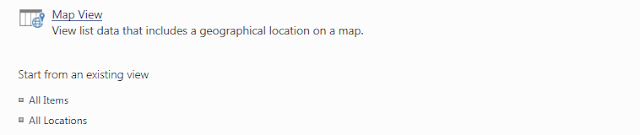

- Create a new view of type “Map view”
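For readers who would rather see what the scripted steps involve, here is a minimal JavaScript sketch of the approach. It is not the referenced script. It assumes the code runs on a SharePoint page where the JSOM libraries (`sp.runtime.js` and `sp.js`) are already loaded, and it assumes the map view reads the key from the `BING_MAPS_KEY` entry in the web's property bag. The function names, the list title, and the display name are hypothetical placeholders.

```javascript
// Sketch only: assumes a SharePoint page with sp.runtime.js and sp.js loaded.

// Build the field definition for the hidden field type.
// "Geolocation" is the internal type name; the display name is up to you.
function buildGeolocationFieldXml(displayName) {
  // Escape characters that would break the XML attribute value.
  var safeName = String(displayName)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
  return '<Field Type="Geolocation" DisplayName="' + safeName + '" />';
}

// Store the Bing Maps key on the current web and add the field to a list.
// listTitle, displayName and bingMapsKey are supplied by the caller.
function addGeolocationField(listTitle, displayName, bingMapsKey, onDone, onError) {
  var context = SP.ClientContext.get_current();
  var web = context.get_web();

  // Assumption: the key lives in the web property bag under BING_MAPS_KEY.
  web.get_allProperties().set_item("BING_MAPS_KEY", bingMapsKey);
  web.update();

  var fields = web.get_lists().getByTitle(listTitle).get_fields();
  fields.addFieldAsXml(
    buildGeolocationFieldXml(displayName),
    true, // also add the new column to the default view
    SP.AddFieldOptions.addToDefaultContentType
  );

  // Send both changes to the server in one round trip.
  context.executeQueryAsync(onDone, function (sender, args) {
    onError(args.get_message());
  });
}
```

A call such as `addGeolocationField("Offices", "Location", "<your Bing Maps key>", onDone, onError)` would then be wired to a button or run from the browser console while signed in with enough rights to edit the list.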

If you have performed the steps above successfully, you should see a full map with all of your locations on it, as in the screenshot below!